Stream Eddies

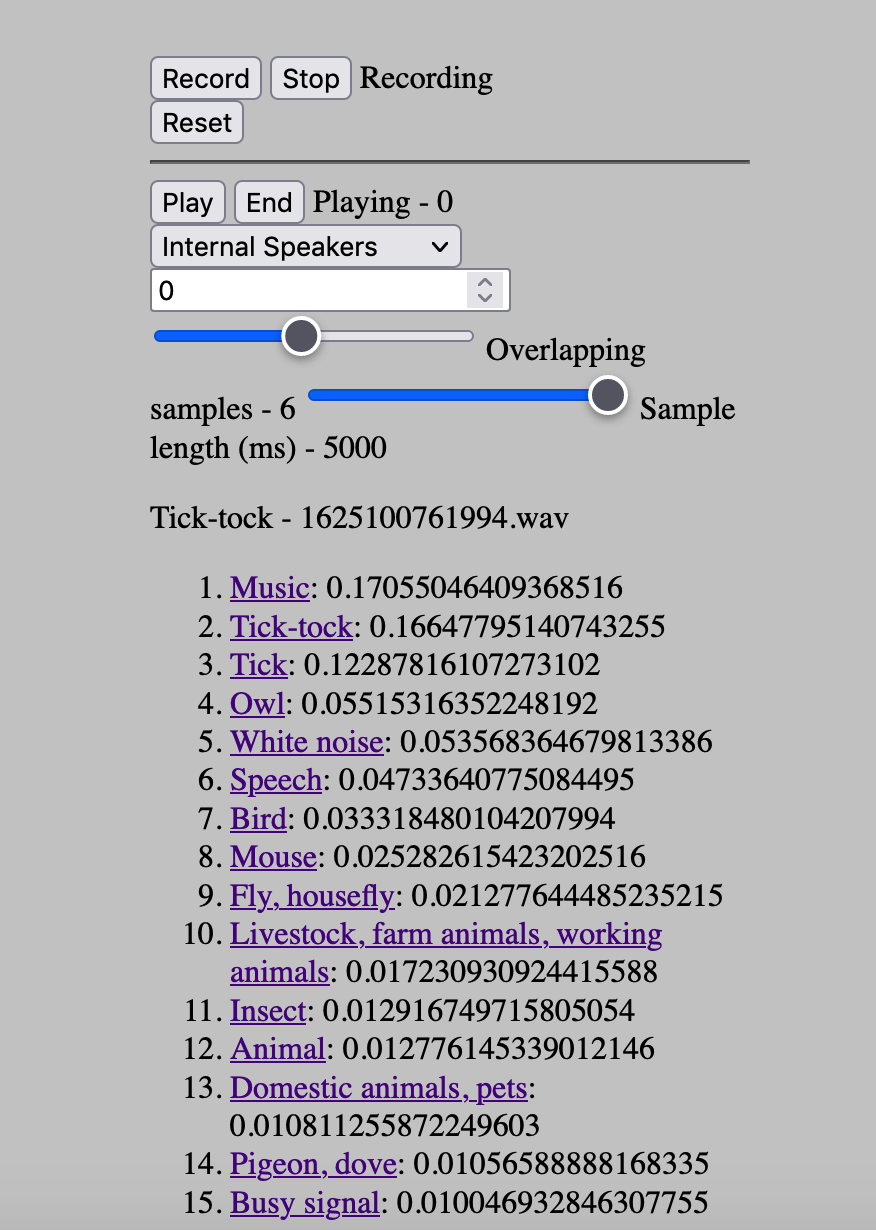

Stream eddies is a granular sound regurgitator that uses a machine learning audio classifier to sort and select samples. Like other granular effects such as Clouds/Beads and streamStretch~, it aims to take an ongoing but non-uniform source signal and give it a somewhat denser consistency. Stream eddies works by chopping the incoming sound into 5-second samples, running the classifier on each sample, and then semi-randomly choosing samples to play back, with a bias toward the labels the classifier has returned most often so far. For example, if after 2 minutes of input the classifier has returned “trombone” more often than any other label, the sample that plays back most frequently will be the one that the machine learning analyzer has deemed the most trombone-like.
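The selection logic described above can be sketched roughly as follows. This is an illustration only, not the code from the repository: the names `chop` and `EddyPool`, the per-label score dictionaries, and the weighting formula (label count times classifier score, summed over labels) are all assumptions made for the example.

```python
import random
from collections import Counter

CHUNK_SECONDS = 5  # sample length stated in the text


def chop(signal, sample_rate, seconds=CHUNK_SECONDS):
    """Split a sequence of audio frames into full-length chunks.

    Any tail shorter than one chunk is dropped (an assumption).
    """
    size = sample_rate * seconds
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]


class EddyPool:
    """Hypothetical pool of classified samples with biased random playback."""

    def __init__(self, seed=None):
        self.label_counts = Counter()  # how often each label came out on top
        self.samples = []              # (sample_id, {label: score}) pairs
        self.rng = random.Random(seed)

    def add(self, sample_id, scores):
        """Store a sample and count its highest-scoring label."""
        top_label = max(scores, key=scores.get)
        self.label_counts[top_label] += 1
        self.samples.append((sample_id, scores))

    def weights(self):
        """Weight each sample by how strongly it matches frequent labels."""
        return [
            sum(self.label_counts[label] * score for label, score in scores.items())
            for _, scores in self.samples
        ]

    def choose(self):
        """Pick one sample id at random, biased by the weights above."""
        ids = [sample_id for sample_id, _ in self.samples]
        w = self.weights()
        if sum(w) <= 0:
            # Fall back to a uniform choice if every score is zero.
            return self.rng.choice(ids)
        return self.rng.choices(ids, weights=w, k=1)[0]


pool = EddyPool(seed=0)
pool.add("a", {"trombone": 0.6, "speech": 0.4})
pool.add("b", {"trombone": 0.9, "speech": 0.1})
pool.add("c", {"speech": 0.7, "trombone": 0.3})
next_sample = pool.choose()  # any of "a", "b", "c"; "b" is the most likely
```

In this sketch “trombone” has been the top label twice and “speech” once, so sample "b", the most trombone-like, carries the largest weight, while the other samples still get played some of the time.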

Instructions for running stream eddies are attached to its code repository.

The motivation for stream eddies comes from thinking about streaming-first (instead of recording-first) approaches to working with sound. A challenge when working with streams (by which I mean ongoing sound sources, e.g. a radio or a livestream of birds) is that you might want to tap into a given source at any point in time and have it sound like that source. But the birds may only be vocalizing a third of the time, leaving you with only silence or background noise when you might expect to be able to access a “bird” sound. Time-stretching and texture synthesis are good ways of removing momentary silences from a signal, but they don’t necessarily aim to amplify its essence. Stream eddies tries out a naive idea: that automatic classification might be able to detect and then distill that kind of essence by repeating the most characteristic sounds most often. Of course, the logic that the classifier imposes on the sonic world distorts and narrows it dramatically – in the case of this Google/IBM classifier, the taxonomy of labels and the training models come exclusively from an arbitrary set of YouTube videos. The software, then, while fairly effective in condensing a sparse incoming signal into more readily available sound, is more interesting as a way to listen to machine listening in all its confused and reductive fervor.

I riffed on stream eddies in a contribution to Flitr, a research initiative by cached.media. (Many thanks to M. Sage for the inclusion.)

Here is an example of stream eddies processing a slowly station-hopping FM radio in southwestern Ireland in 2019:

… a short example scanning the Montreal FM band (also 2019):

… and an example where the input signal started with YouTube radio channel “Lo-Fi Beats to Study and Relax to” and switched to a very generic TED Talk: