-

Medium metadiscourse on Mastodon and in radio

It’s been very fun and heartening in the last week to see a big wave of people I followed on Twitter moving over to open source federated alternatives, namely Mastodon (or its fork Hometown, in my case). There are a lot of good blog posts making the rounds where people offer tips on getting oriented in the fediverse and reflections on its differences from Twitter; this is not one of those. Rather, I want to reflect on this present and probably brief period where such a huge portion of what people are posting on Mastodon is about Mastodon – this moment of lively medium metadiscourse as people help each other explore a new kind of communication channel.

As a radio historian, it’s fun to imagine this kind of moment as a small and very distant window into how radio operators in the first two decades of the twentieth century would have related to each other and to the medium they were trying to define. As Susan Douglas documented in Inventing American Broadcasting, amateur operators held club meetings over the airwaves where they would tackle technical problems and share aspirations for what widespread radio adoption in the United States might look like.

This is not to say that we should glamorize medium metadiscourse. Douglas’s book shows how a specific complex of white, middle-class masculinity, bolstered by hero-inventor stories, propelled these amateur operators. Today we can look to “web3” for similar forms of entrepreneurship fetishism in an aggressive and grift-pervaded medium metadiscourse whose medium, in most cases I’ve seen, doesn’t even exist yet.

My corners of the fediverse have mercifully little incursion from web3 or its driving interests. Even so, the present levels of medium metadiscourse there are unsustainable – people can only stay interested in the nuances of cross-instance hashtag propagation for so long. Mastodon’s test will be (or rather has been; at small scales relative to Twitter, it has been succeeding for years) how many people ride each platform migration wave to a place where they feel settled and comfortable with a group of people they can and want to talk with. If, when placing a phone call in 1900, you still thought more about the operation of automatic switchboards than about what you planned to say to the person you were calling, then the telephone would not have felt like a stable or friendly medium.

The upside, in my view, is that these moments are when media are at their least transparent, in Lisa Gitelman’s terms. “The technology and all its supporting protocols” (both technical standards and pieces of etiquette like answering the phone with “hello”) have not yet “become self-evident as the result of social processes” (Always Already New, p. 6). The “success” of the medium will depend on the eventual capacity for its users to become inattentive to those protocols in favor of the “content” that they help transmit. But in these more opaque moments, infrastructural curiosity is closer to hand, as are exciting potentials for differentiation from other media (as can be seen in expressions about how Mastodon isn’t and shouldn’t become just like Twitter).

The twist I’d add, following from a few years of researching American radio since 1950, is that metadiscursive moments are not unique to a medium’s early years. Radio today features a lot more emphasis on radio itself than it seems to have featured during what’s often called its heyday. This emphasis today tends to be nostalgic or defensive, assuring listeners of the medium’s advantages over newer kinds of channels, rather than exploratory; but I don’t think it’s incompatible with a kind of return to that sense of openness, as a 21st-century flourishing in experimental radio arts has shown.

One narrative frame I’m testing out as a generalization from radio automation’s history goes something like this:

- A medium is emergent; its socio-technical protocols are open to and in need of definition

- Protocols stabilize to a point that they become “transparent;” they succeed as a medium

- Protocols and routines become so stable that many of them can be automated

- A feedback loop of automation and capitalist predation can begin to strip out the internal work that differentiates meaningful channels within the medium, increasing its homogeneity

- Users/audiences and capitalists increasingly abandon the medium

- The medium’s protocols and resources are left under-used and once again appear available for exploratory uses

This process has taken a century in broadcast radio, but I believe we can map it more or less cleanly onto internet media at much shorter time scales. Anxieties about automation (“bots” and “the algorithm”) have been pervasive on platforms like Twitter for some time; one way to read them is that the social protocols for expression in these venues have become so standardized that they can be convincingly automated, and it grows less easy to tell an automated speaker apart from a “real” one. If I am right that high levels of medium metadiscourse characterize either end of the above cycle, then it will be interesting to see how Twitter users continue to talk about its transformation under Elon Musk’s private ownership; but it will be much more exciting to watch what comes of the current flurry of general eagerness to understand and adjust protocols for the federated social web.

-

On finding a new text editor

I’ve spent an unreasonable amount of time this summer evaluating a few different text editors after Atom, the application where I did almost all my writing and notetaking between 2016 and 2022, got the axe from its corporate developers. I’m writing this post to capture my reflections from this largely procrastination-fueled quest for the right editor before those thoughts vanish on the wind. Maybe it can also serve as a kind of Wirecutter-ish tour through some choices for people in a similar boat – that is, humanities researchers who use or might want to use a plain text workflow instead of a word processor. The choice I ultimately (and unexpectedly) arrived at was to use gedit, which was simply the built-in text editor on my operating system (Ubuntu Linux – gedit is also available for Mac OS, though I haven’t tried it there yet). People on other operating systems, especially those who already use Atom (edit December 2022: I just learned there’s a fork, Pulsar, for ongoing maintenance of what was Atom; I haven’t tried it out but it might be where you want to look first if you were an Atom enthusiast), might want to go with Codium or Sublime Text, or something like StackEdit if you don’t mind working in a web browser. But gedit’s simplicity and built-in-ness were just as important to me as its features, so this post is also a defense of starting out with whatever text editor you have on hand.

Just before starting a PhD program in 2018, I happened upon Scott Selisker’s “Plain Text Workflow for Academic Writing with Atom.” It was a lucky and timely find: I had used Scrivener (the writing environment that provided some inspiration for Selisker’s workflow) to draft my MS thesis a few years prior and had used Atom nearly every weekday since then during a job as a digital humanities developer. Working on websites and code documentation for that job meant I was already familiar with markdown, the markup language that Selisker uses (this post and most content on my website are also written in markdown). Heading back into school, I was eager to set up a workflow that combined what I liked about these tools and that could help me stay organized amid an onslaught of reading. I also borrowed part of my notetaking approach from the design of a specialized markdown notes editor called The Archive. This category of markdown-based tools for researchers has kept blooming a bit in the time since, e.g. with the mind-mapping application Obsidian or Zettlr. Other tools for academic writing popped up as packages (i.e. plugins, extensions) for Atom, including nice integrations with the citation manager Zotero. Since I knew some JavaScript, I could make Atom packages of my own to do simple time-saving things like clean up messy text blobs on their way over from PDFs I was reading. Atom’s package repository showed that a bunch of other people installed that one, which made it feel like I was in a loose but sizeable community of people using the application for research and writing.

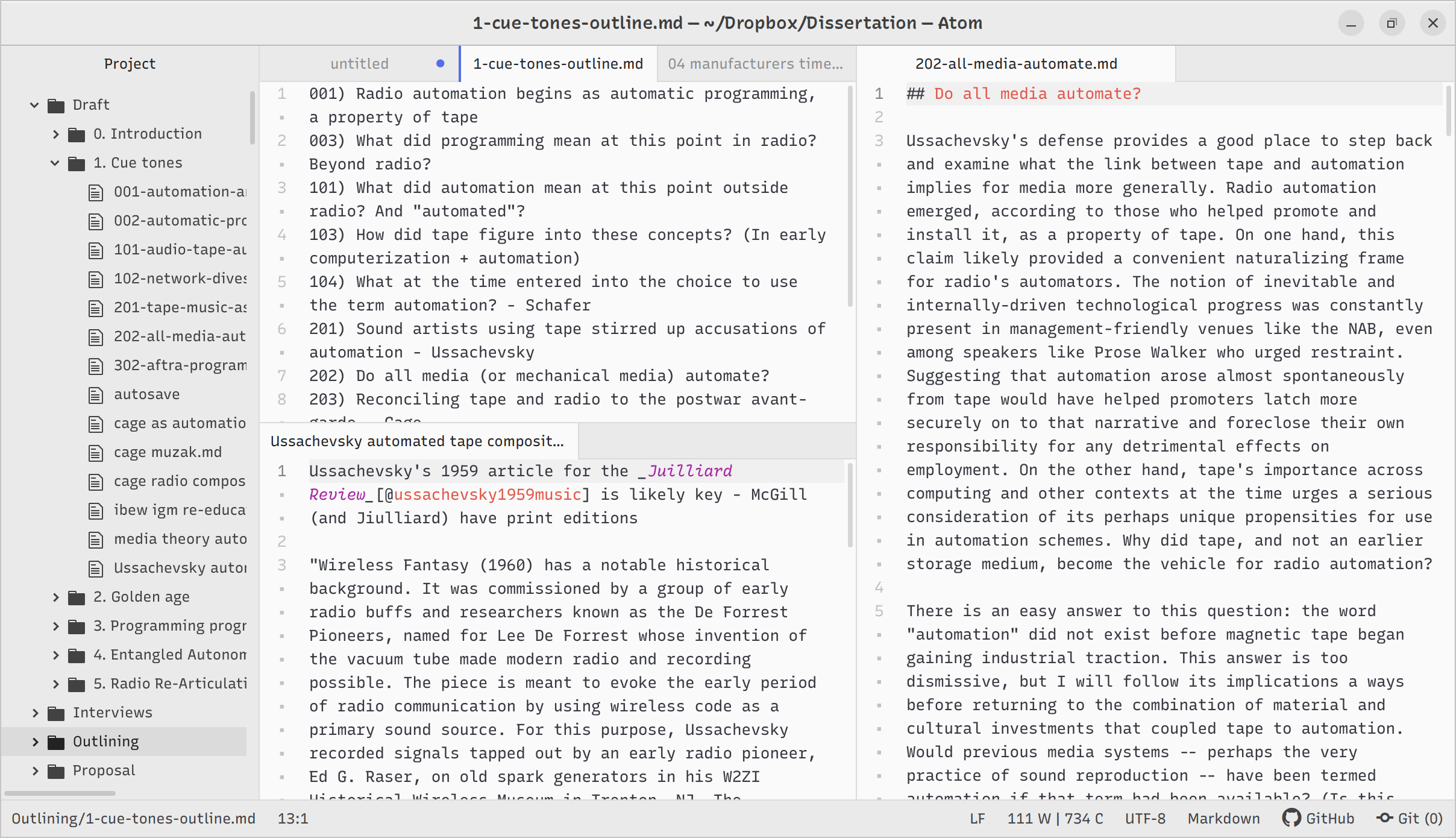

The old setup in Atom

The big appeal of a plain text workflow for me is that it’s just files in folders. It reduces some minor but massively-recurring frictions (e.g. clicking all the way through to a new blank document in Word, or wondering which larger document a note belongs in) that stand between noticing an idea and storing it on my hard drive. The resulting heap¹ of text files is then readily searchable from the regular file browser on any operating system, and the files are also very transportable – I sync my Notes and Dissertation directories to Dropbox, where they use up a tiny fraction of the free storage space. They move between computers and software applications without any need for format conversion. When my old MacBook called it quits heading into my fourth PhD year, I just set Dropbox up on the refurbished Ubuntu laptop that replaced it; this automatically retrieved all my reading notes as well as the Dissertation > Snippets directory that had been slowly but steadily growing over the first three years whenever I spotted or thought of something that seemed like it belonged. Around that time I started sorting these short text files into “chapter” buckets as I got a better sense of the dissertation’s structure. Then, when I really got underway with writing the thing itself, my work consisted of filling in the gaps between these fragments – some of which I had totally forgotten about in the time since jotting them down.

Then, in summer 2022, Atom got its curtain call. Its maintainer, GitHub, had been acquired by Microsoft in 2018. In announcing that it would sunset Atom, GitHub touted “new cloud-based tools” including Microsoft’s Visual Studio Code as superior alternatives. The decision seemed pretty transparently driven by Microsoft’s desire to shepherd users into a single program, VS Code. It also treated Atom’s user base as entirely made up of coders, which is incorrect. VS Code seemed like a very unappealing alternative for these reasons, but I decided to give it a try after someone on Mastodon pointed me to the open-source version that bypasses Microsoft’s telemetry. This version, VSCodium, did impress me as an alternative to Atom. Most features I’d enjoyed were available, and the coding-specific features didn’t impinge on the writing experience to the extent the application’s name suggests they would. A good number of packages, including Zotero integration, were available and even easier to install than in Atom. Despite these pleasant surprises, I couldn’t shake two sources of unease. First, while I suspect it would be pretty easy to port my own Atom packages over to Codium, I didn’t like the idea of uploading my work to the Microsoft Marketplace that now served as the repository for extensions. Second, I fear that Microsoft plans to next pull the plug on the desktop versions of VS Code in favor of shepherding users into an in-browser application – all the emphasis on “cloud-based tools” in the death-of-Atom announcement seemed like cause for concern. I’m very uninterested in relying on internet access to work on my writing projects and don’t want to have to switch editors again anytime soon. So I decided to look in a dorkier direction.

For a while, and especially since switching from Mac OS to Linux, I’d had a nagging interest in switching from Atom to a tool with more free/open-source software cred. Atom wasn’t perfect: its underpinning framework, Electron, essentially means that a whole web browser is running in the background, making it more resource-intensive than a text editor should be. There was also the association with GitHub and now Microsoft that became more salient by contrast when most of the software I now used was maintained by volunteer communities. I wasn’t interested in moving into the command line, but I was vaguely aware that Emacs, an editor that has been around for an epoch and is still going strong, was able to split the difference between the bare bones GUI-less approach and something more elaborate. I also saw people post about fantastical productivity-enhancements to academic writing and just to daily computer tasks through customizing their Emacs setups (there seem to be multiple Emacs equivalents to Selisker’s guide; I didn’t dive quite far enough in to be able to recommend a particular one). Paul Ford’s delightful reflection for Wired on software configuration upped the intrigue, though in hindsight it probably should have been a warning. Wanting to get up to speed quickly and replace the basic workflow I had used in Atom, I decided to try a tool called Doom that morphs Emacs into a full-featured modern editor by curating a set of packages for it.

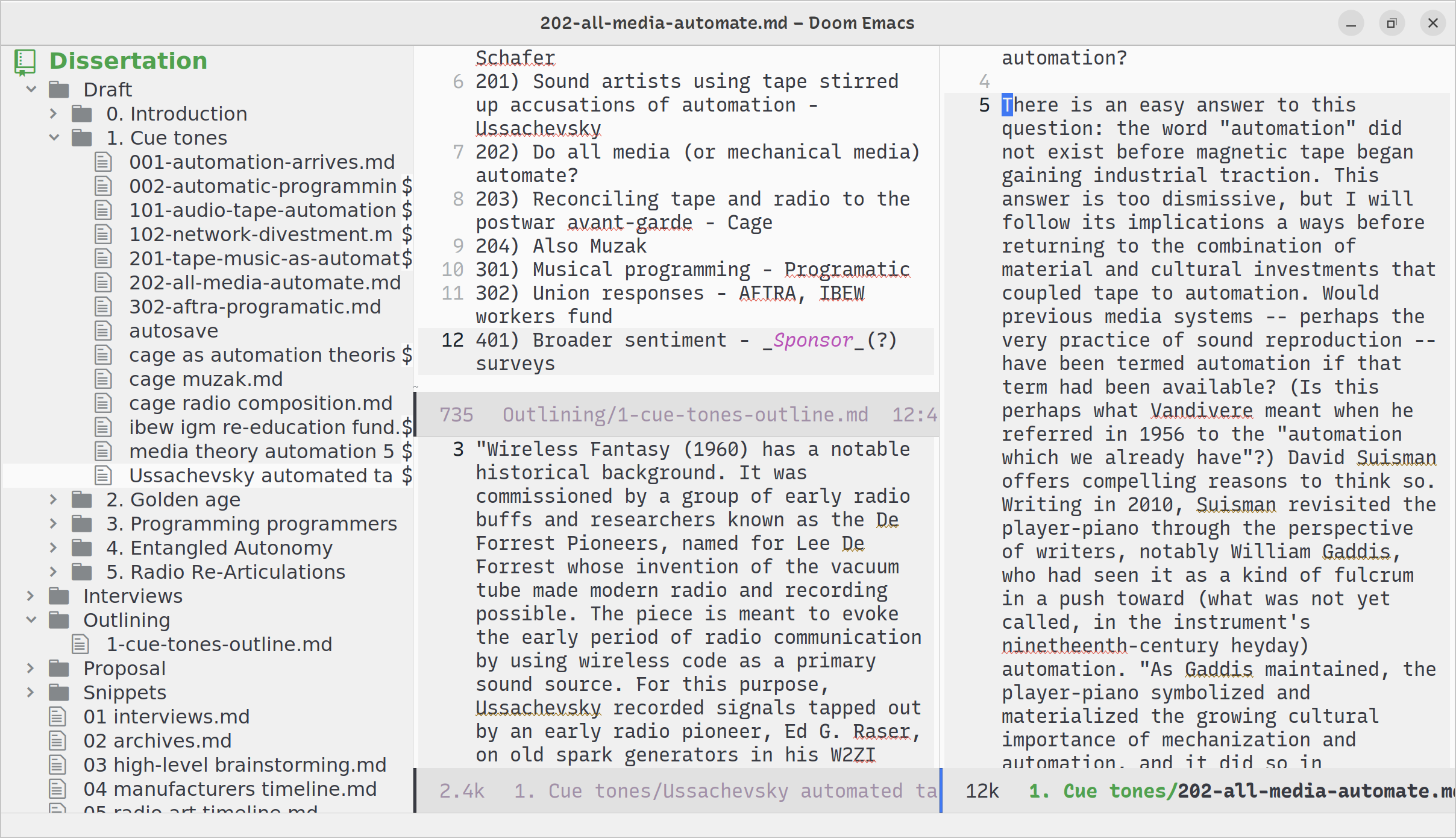

Reproducing my setup in Doom Emacs

While I ultimately found Emacs and Doom frustrating, setting them up was a good provocation to reflect on which features I really want in a text editor and why. To Doom’s credit, just about all of these features were available by activating (un-commenting) module lines in its configuration file. They include:

- A simple file browser sidebar: I tend to jump back and forth between two or three snippet/section files while I’m writing, so my directory contents act as kind of a mini-outline in addition to a navigator. Treemacs, a package that Doom includes as one of its modules, provides this.

- Split-window arrangements of multiple files: having a draft and its corresponding outline open side-by-side, perhaps along with a relevant note in a third partition, is really handy and helps me steer my writing without breaking the flow. Treemacs has a nice set of keystroke commands that will open a file in the spot you want it.

- Easily togglable full-screen mode: writing without any other OS elements or notifications visible really does reduce distraction for me. The Zen package, also included with Doom, is designed to do this.

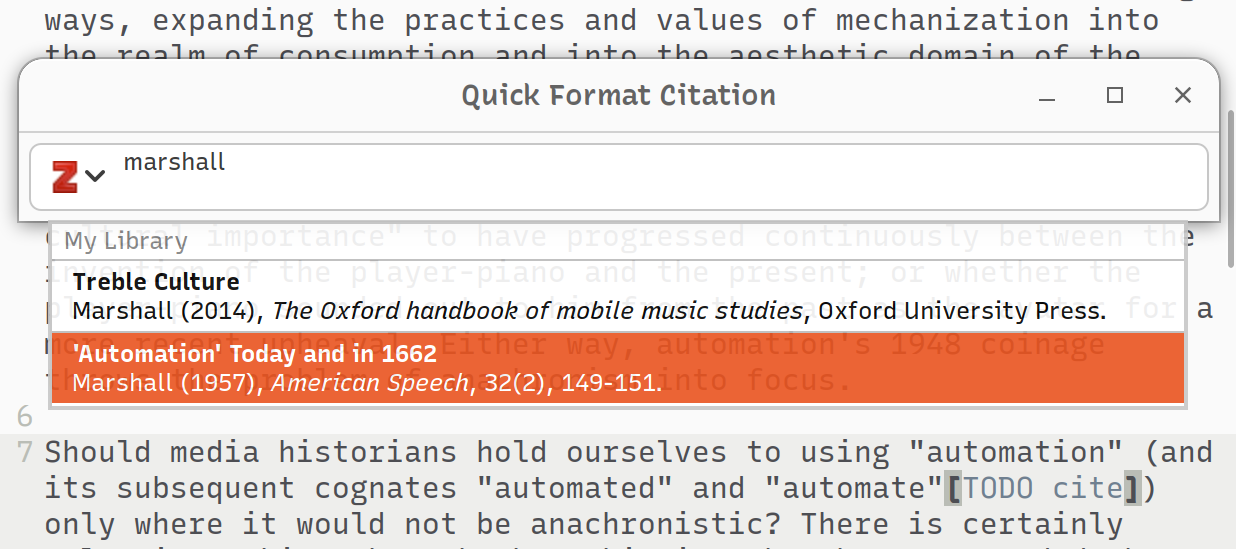

- A way to quickly pull citation keys from my Zotero library into the text file (as explained in Selisker’s post, the workflow uses shorthand references generated by a Zotero plugin called Better BibTeX and then expands these into full citations when you compile your document from markdown to Word/PDF/etc.). The Doom-included tool to do this is Citar, which was a little more complex than what I was used to but which worked well.

- Text cleanup for quotes pulled from a PDF: I mentioned above that I made my first Atom package to do this; it turns out that once you get used to not needing to manually repair line breaks / hyphenations or change typographer marks (“) to standard subquotes (') all the time, it becomes very annoying to do it again. I couldn’t find an Emacs package that does this. I assume that it would take two, maybe three lines of elisp code in the Emacs configuration file to add this feature. But for me, unfamiliar with lisp and already a little weary at the Emacs learning curve, it didn’t feel like an easy addition.

My problem with Emacs, ultimately, was that I liked my existing operating system and didn’t want to run what turned out to be a whole other operating system inside of it just to write. All the tools I use outside the text editor, including Zotero, the file browser, PDF readers, and even email, could be subsumed by Emacs with the right configuration. I got far enough in to understand why this is very appealing to the right kind of user but also to be somewhat repulsed by it myself. That feeling was largely because, despite Doom saving me the work of tracking down the right modules, these add-on features didn’t seem to interact particularly well with one another. Zen-mode, for instance, only did what I wanted it to do if I remembered to toggle Treemacs on before toggling fullscreen. I’m still confused about how the Projectile package communicates with the Workspaces package, which can retrieve your document windows from a previous session but evidently can’t re-open Treemacs. The highly modular architecture was great in theory but proved confounding in practice, with the added frictions that the overall interface lacked visual coherence and that the Doom documentation uses a playful tone that I found irritating when trying to troubleshoot. I started to feel the benefit of not relying on a mouse/trackpad that would come with learning Emacs’s powerful key-command interface, but I also questioned whether it was worth the confusion of practicing different shortcuts for basic operations like undo, copy, and paste that I use in other programs all the time. The thought of spending enough time to learn the Emacs way of doing things so that I could use it properly started to become exhausting. It occurred to me that maybe I could go in the other direction – instead of something more complex and robust than Atom, maybe I could use something simpler and rely on other tools for more features, instead of continually extending the central tool.

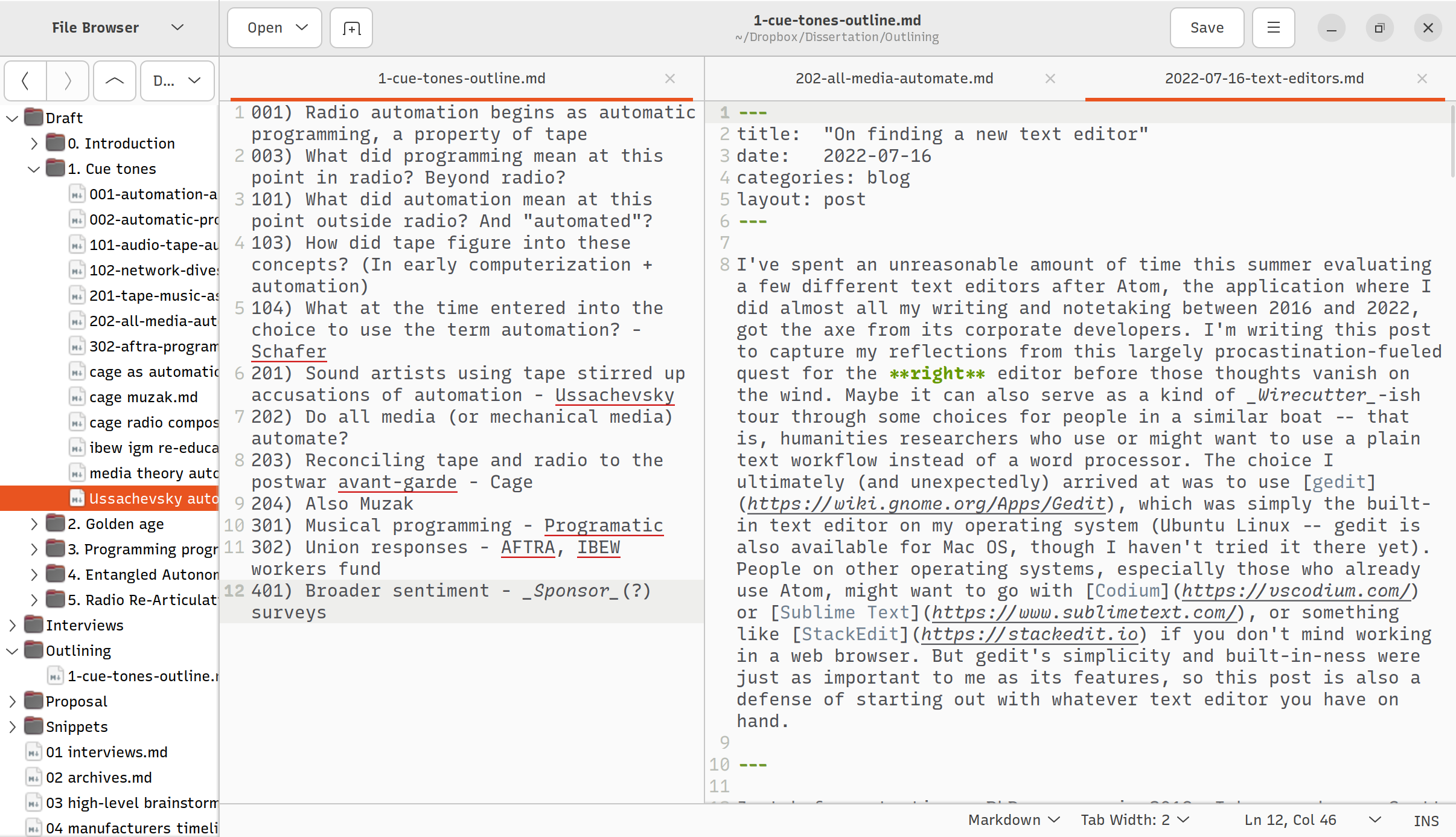

My current gedit view (with the additional gedit-plugins Linux package)

This was my thinking when I took a closer look at gedit, the default text editor for the GNOME desktop environment that had come bundled when I installed Ubuntu.² gedit does a good job of presenting a bare bones interface when you first open a file, somewhat analogous to TextEdit in Mac OS but without that program’s rich text default. It turns out, though, to have a streamlined but impressive set of plugin features available a few clicks away. One of these provided exactly what I was looking for: the built-in “external tools” plugin lets you assign a keyboard shortcut to a small script in Bash, the language the command line terminals on Linux and Mac OS use by default. In other words, the editor helps you launch a little micro-program you’ve designed and that you could just as easily launch outside the program (but it makes the document/selection contents available as input for that micro-program and lets you pop its output in at the current cursor position). This meant I could pretty quickly reproduce my PDF-quote-cleaning add-on, which used 187 lines of JavaScript as an Atom package, as a single-line Bash script (albeit with a very unattractive cluster of regular expressions):

xclip -o -selection clipboard | tr '\n\r' '  ' | sed -E -e "s/- //g" -e "s/^[ \t]*//" -e "s/[ \t]*$//" -e "s/[\"“”]|[’‘]+/'/g"

(This command pulls the current clipboard contents, removes any line breaks, removes the hyphen-space sequence left over in any line-wrapped words, trims any spaces/tabs from the start and end, then replaces any typographer quote marks and/or double quotes with standard single-quote marks for subquotes.)
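For anyone who wants to try the text-cleaning half of a pipeline like this without touching the clipboard, here is a minimal sketch that wraps it in a function reading from standard input. The function name `clean_quote` is hypothetical, and two choices are my assumptions rather than the original tool's: `[[:space:]]` stands in for `[ \t]` so the trimming also behaves on non-GNU versions of sed, and the quote marks are matched by alternation instead of a bracket expression so the result doesn't depend on the terminal's locale.

```shell
#!/usr/bin/env bash
# clean_quote is a hypothetical helper name; it is not part of gedit
# or of the original Atom package. It reads text on stdin and writes
# the cleaned quote to stdout.
#
# Steps, in order:
#   1. turn line breaks (LF and CR) into spaces
#   2. drop the "- " left behind by words hyphenated across lines
#   3. trim leading and trailing whitespace
#   4. turn straight double quotes and curly quotes into a plain '
clean_quote() {
  tr '\n\r' '  ' |
    sed -E \
      -e 's/- //g' \
      -e 's/^[[:space:]]+//' \
      -e 's/[[:space:]]+$//' \
      -e "s/\"|“|”|(‘|’)+/'/g"
}
```

As a gedit external tool on Linux, this could presumably be fed from the clipboard with `xclip -o -selection clipboard | clean_quote`. One caveat that also applies to the one-liner: the hyphen rule removes every hyphen-space pair, including any the PDF's author actually meant.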

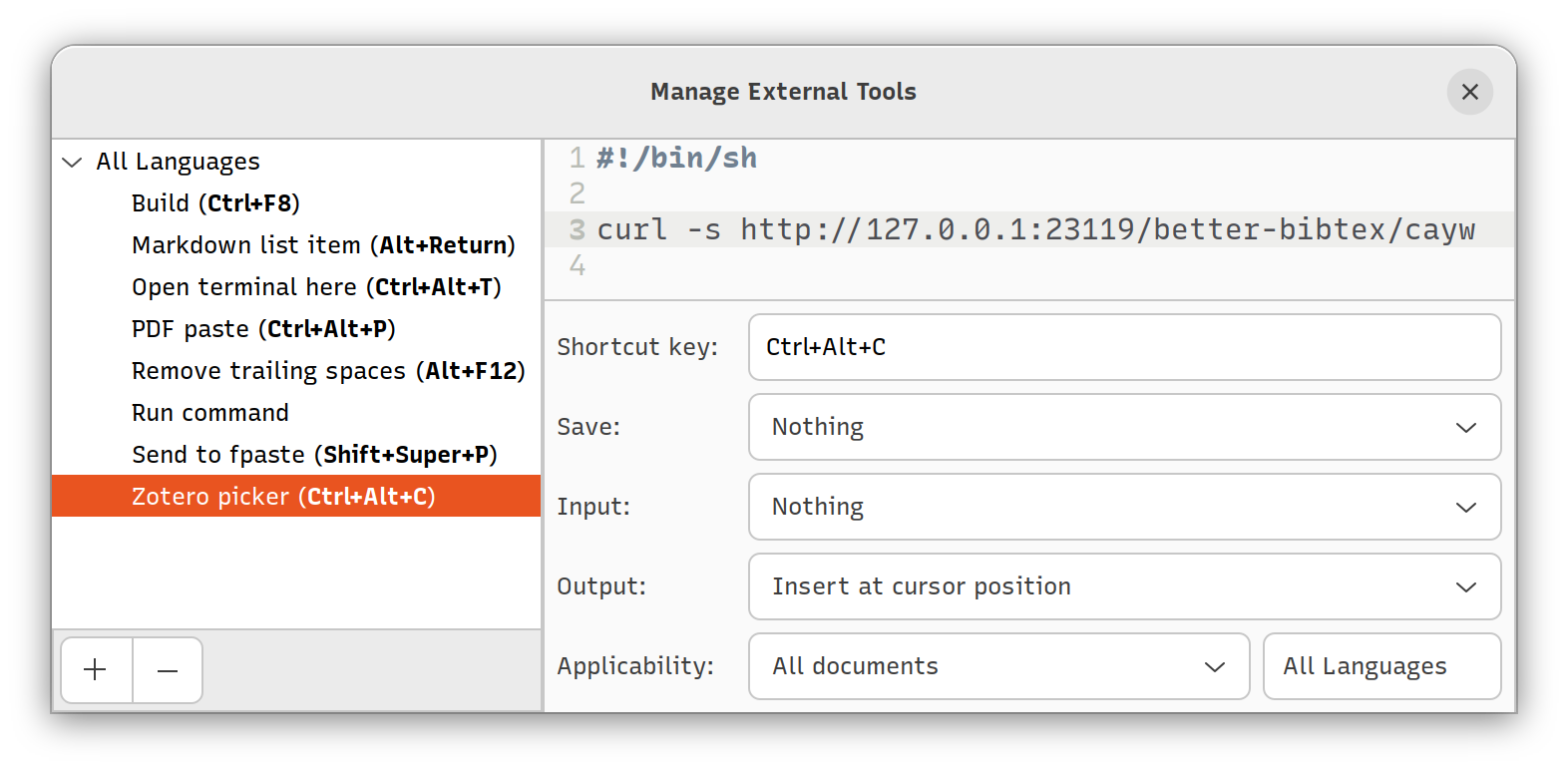

I got even more excited when, after searching for a gedit-Zotero interface and coming up empty, I eventually realized that this too could be built in a single line. Thanks to an elegant design choice in Better BibTeX, the Zotero plugin that I had already been using, it turned out that the following suffices as an external tool to pull up a Zotero entry picker and pop the selected citation key in at the gedit cursor:

curl -s http://127.0.0.1:23119/better-bibtex/cayw?format=pandoc\&brackets=true

(This command uses a local http request to ask Better BibTeX to launch the citation picker popup, which waits for the user to pick an entry and then returns its key, formatted so pandoc will find it and turn it into a citation at compile time.)

The external tool for Zotero integration and the popup it launches
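One rough edge of a bare curl call is that it inserts nothing and says nothing when Zotero isn't running. A minimal sketch of a slightly more talkative version follows; the function names `cayw_url` and `pick_citation` are hypothetical, the error message is mine, and only the URL itself comes from the command above. The format argument is passed through unchecked, so anything other than `pandoc` is an untested assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper names; only the URL pattern comes from the post.

# Build the local citation-picker URL.
# First argument: output format (defaults to pandoc, as in the post).
cayw_url() {
  local format="${1:-pandoc}"
  printf 'http://127.0.0.1:23119/better-bibtex/cayw?format=%s&brackets=true' "$format"
}

# Ask the picker for a citation key and print it to stdout.
# -s keeps curl quiet; -f makes HTTP errors count as failures,
# so the message below is shown instead of an error page.
pick_citation() {
  curl -sf "$(cayw_url "$@")" || {
    echo "Could not reach Better BibTeX; is Zotero running?" >&2
    return 1
  }
}
```

Calling `pick_citation` as the body of the external tool should behave like the one-liner when Zotero is open, and otherwise leave the document untouched while reporting the problem on standard error.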

I very much hope that gedit won’t fall out of maintenance any time soon, but even if it does, the work I’ve done so far to customize it for my needs won’t have been a tremendous lost investment; it’s been both highly straightforward and highly reusable. I do feel a little sad to miss out on the much livelier user community around Emacs, which includes people making and using some very impressive workflows around academic writing. But at the same time, I’ve always wanted my workflow to be more legible to the smaller community of peers who inspire my actual research and writing. Using a text editor that has been a built-in standard for years, that fits in well with its surrounding operating system, and that is intended to interact well with outside tools rather than to replace and subsume them, feels like a step in that direction.

1. I’ve enjoyed reading the recent blog-dialog between CJ Eller and Eric Stein, via assemblag.es, on whether such accumulations are unhelpful garbage heaps or a healthy part of a “compost epistemology.” Eller’s initial post resonated with my reluctance to try wiki-building/knowledge-mapping tools like Obsidian that in most ways look really appealing. Even deciding what words warrant links/entries is more deciding than I want to do in the course of dropping a note or snippet into the pile. At the same time, simple text search across these files, along with their tiny sizes, has made me comfortable “embracing the heap.”

2. As of the latest GNOME version, gedit is no longer the default text editor. The new GNOME Text Editor application is visually beautiful and well designed, with great Markdown support. Unfortunately, its developers are opposed to adding gedit’s external tools capability to the new editor, reasoning that any users in search of “advanced features” will want to use the coding environment GNOME Builder instead. Builder is also very well designed, but far too software-development tailored to work well with writing projects. It’s a bit frustrating, as with GitHub’s Atom announcement, to see non-coding uses and users fall through the cracks in these considerations.

-

A call for audio contributions: 25 Hz

Short version: Record a couple seconds of a steady sound (e.g. from a synthesizer or software audio tool) whose fundamental pitch is 25 Hz. Send any recordings to me by email to andykstuhl@gmail.com. I will use your sound(s) in a radio artwork and include you in its list of contributors.

Any suitable sounds I receive by May 9 (2022) will be used in the project.

Long version: In radio, “automation” refers to a system that can carry out a sequence of different sounds by starting and stopping the right audio sources at the right times. Today these sources are typically digital files, but in the 1950s, they were tape machines. Radio automation began as the technique of adding “cue tones” to recorded sound. When a special tape player detected one of these tones, it would stop the tape and start another machine playing. In this way, automation systems could interlace music with advertisements and station announcements to make up a continuous broadcast program. From 1953 up into the era of syndication by satellite, almost every automation system used the same frequency for its cue tones: 25 Hz. Hovering just above the lower bound of the purported “normal” human hearing range (20 Hz to 20,000 Hz), 25 Hz had to be filtered out of the broadcast signal on its way from automation system to transmitter. Here in this subtracted spot in the audio frequency spectrum, all the seams in a seamlessly automatic signal hid from hearing.

The piece I’m building for Wave Farm’s Radio Artist Fellowship will open up radio automation through its history and through those seams. The backbone of the piece will be 25 Hz cue tones that, instead of triggering seamless transitions, jarringly punctuate a collection of automation-related recordings. Because 25 Hz is so far into the bass register, a simple sinusoid tone would likely be inaudible in most listening environments. I will therefore need to use sounds that include overtones atop the 25 Hz fundamental. Rather than choose one arbitrary timbre to produce that more complex tone, I’d like to treat this problem as an opportunity for others to add sounds to the piece, which will cycle through a wide variety of these tones. These contributions will be credited but not financially compensated, so I hope it’s something people with the necessary means will consider spending just a few minutes on if interest strikes. If you have a synthesizer, DAW, tuner app, or other audio generator handy, I would be grateful for any and all little snippets of 25 Hz you care to send my way.

Thank you! —Andy

-

Reflections on Pauline Oliveros and Software in Art

The composer Pauline Oliveros, who passed away recently at the age of 84, leaves behind a many-threaded legacy of musical adventuring amid vigorous support for colleagues, as Geeta Dayal documents in an obituary for Frieze. In all these pursuits, and in her writing, Oliveros’ voice was harsh in its directness and uplifting in its clarity — the fruit of a rare commitment to distilling avant-garde ambitions into simple vessels. Her series of “sonic meditations” makes that effort particularly apparent. In these poetically honed written works, she addresses the reader with instructions that, when followed by a group, bring the musical piece into being and the participant listeners into transformative states of awareness. I first learned about Oliveros in an intro computer music course when we performed one of these pieces, Teach Yourself to Fly.

Teach Yourself To Fly

dedicated to Amelia Earhart

Any number of persons sit in a circle facing the center. Illuminate the space with dim blue light. Begin by simply observing your own breathing. Always be an observer. Gradually allow your breathing to become audible. Then gradually introduce your voice. Allow your vocal cords to vibrate in any mode which occurs naturally. Allow the intensity of the vibrations to increase very slowly. Continue as long as possible, naturally, and until all others are quiet, always observing your own breath cycle. Variation: translate voice to an instrument.

Both times I’ve taken part in Teach Yourself to Fly, I’ve felt the piece operating on me at a level untapped by any other musical experience. Nodes dedicated to breathing, vocalizing, listening, and social interrelation were all pulled up from the tangle of my brain, which slowly reorganized itself around them. I was floored that the piece could achieve such a deep-reaching effect and such a complex sound structure through just a few carefully worded instructions. It was only later, when considering Oliveros’ work alongside more recent projects in interactive music, that I saw her text scores as a revelatory move for composition in the era of computational media. Like a software program, a sonic meditation was composed of instructions. A program only takes its intended shape at the moment of its execution by the host environment, whose condition affects and is changed by the process of interpreting those instructions. Oliveros sculpted her sonic meditations from the same material as software, but located her process in the consciousness of her listeners. In doing so, she crafted a tool for resisting the pull of computation toward displays of power and for holding on to the promise of interpretation as the act that artists can imbue with the richest substance.

In an essay titled “Software for People,” Oliveros describes a turning point in her artistic trajectory: a refining of the sonic meditations practice toward the goal of training other musicians to modulate their attention between “global” and “focal” states. The title on its own points out that her sonic meditation pieces are of the same stuff as computer code, and she refers in the essay to her piece as a “program” for the high school woodwind section performing it. More significantly, she notes that the “program allows for failures in the system to have a positive function.” In her compositions, Oliveros cared less about the eventual output — the audible result of a program’s execution — and more about the transformation of the interpreter. As Kerry O’Brien writes,

In these works, experiments were not conducted on the music; the music was an experiment on the self. Anyone searching today for the complete box set of “Sonic Meditations” won’t find it, because, as the composer wrote, “music is a welcome by-product” of this composition. The experiments remain in each listener.

At its core, computation offers artists control over an instruction-interpreter that makes no errors and that can translate measurement into action at speeds outside the grasp of human comprehension. It makes sense, then, that many software-based artworks have focused on harnessing those new capacities for responsiveness and multiplicity toward their intuited ends of producing delight or awe in the audience. These works, even the ones we might describe as interactive or participatory, use machine-executed processes to impress a sensation upon the human subject. Oliveros, in marking her text scores as “software for people,” mapped out a very different agenda for works that center on the following of instructions: the phrase “for people” at once moves interpretation from the machine to the person and remakes the configuration between piece and listener as for rather than upon. In her emphasis on attention, Oliveros uses keywords (“global”, “state”) that crop up early on in most computer science courses; what unites these terms is that they don’t describe the output of a process but rather denote the nature of its enactment inside the machine.

Oliveros’ notion of “software for people” imparts to computational art the principle that interpretation itself is a more fertile ground for transformation than the output of an interpreted process. Her example asks us to resist the tendency of computational media to conceal the interpretation at work inside the machine and to bring the viewer or listener into a process of co-interpretation — rather than just interaction — with the piece. She paved the way for artists to use instructions as a material addressed in tandem to listeners and to machines. With the same strokes, she made a convincing case for sound as the vehicle through which that process of simultaneously interpreting instructions and experiencing their result could best occur.

One recent work that deploys the above principle particularly well is Reiko Yamada’s Reflective. Yamada’s interactive musical work takes a new form in each of its installations, with different audio samples and new settings for the listener inspired by the site, but at its consistent base is a project of ushering the listener into a state of reflection on choice and consequence. In an installation of the piece in Cambridge, Mass. earlier this year, Yamada set up a dark, curtained-off space for listeners to enter one at a time into their encounters with the motion-tracking sound piece. She explains that she hoped the darkness would deter gallery-goers from a certain attitude toward the piece: many of us first approach an interactive work with an urge to test the extent and parameters of our influence in the system. In contrast to that frantic mode of scrambling for control, Yamada wanted her listeners to be listeners — to make slow and thoughtful movements and to follow the music into a state of meditation and decision.

In the hallway leading up to that listening chamber, the first wall displayed a diagram of the space, surrounded by instructions. “1. Enter the circle, slowly, alone,” they began, and shifted subtly from physical directions into primers for the intended state of mind: “Each decision leaves traces on your sonic experience…. These traces are your own, imperfect as they may be.” The second wall, surprisingly, was crammed with snippets of code and connective lines routing motion sensor inputs to Max/MSP objects. This laying bare of Reflective’s software structure seemed, at first, out of keeping with Yamada’s goal to steer visitors away from approaching the piece as an instrument. Then again, if visitors were to follow certain directions, why shouldn’t they get the full set, including the ones a computer would follow to co-create their experience? The visibility of these symbols as a sketch, as work-in-progress notation, replaced any sense of the piece’s software component as a sleek black box with an impression of a loose, imperfect cluster of inputs and effects. Most importantly, it emphasized that the unseen part of the piece was formed out of instructions, the same kind of stuff with which the visitors themselves had just been outfitted. Exposing the algorithmic insides of her piece thus made perfect sense as a way for Yamada to beckon visitors away from a power relationship with the piece and onto a stage of active listening.

Despite their shared goal of shaping their listeners’ attention in sound, brought about in part by each musician’s experience with Buddhist meditation practice, no explicit link connects Yamada’s Reflective to Oliveros’ work. Yet Yamada’s computer-reliant interactive piece evokes to a striking degree the aims of the low-tech sonic meditations; and in so doing, it proves how forward-looking Oliveros was with that body of work. “Software for people,” we can be sure, didn’t merely explain an existing process; it set a call for those who would be creating work in situations that had yet to come.

Oliveros, an artist working outside of computation (at the time she penned “Software for People”) yet keenly and proactively aware of its entrance into her field, supplied us with a question that we should hold up to all software-based artworks. The question, characteristically for Oliveros, is concise yet loaded with unfolding substance: Is this software for people? Often in new media art, the software component of a piece is designed to hide itself from sight, maintaining a powerful inscrutability as it reacts to or acts upon an audience. Works like Reflective cast aside the promise of power that attends computational media, instead seizing on these materials as opportunities to involve people in new interpretive situations. For artists working in software, the fact that instructions and their interpretation underlie it all becomes quickly mundane among all the intricacies and layers of abstraction involved in writing code. It’s hard to retain a sense of that fact as remarkable at all, let alone to feel that the interpretation of instructions is a far greater site for groundbreaking artistic work than the upper reaches of algorithmic feats like simulation or streaming data. I know that for me, at least, Pauline Oliveros will continue on as the most potent reminder of that truth for a long time coming.